Recent headlines have reignited debates about the responsible development and deployment of AI. One was about an AI suggesting people should eat rocks. Another was about "Miss AI," the first beauty contest with AI-generated contestants. The former is likely a flaw that will be resolved. The latter reveals a flaw in human nature: the tendency to prize a specific standard of beauty. AI-led doom is the subject of repeated warnings, and the latest came from an AI researcher who personally pegged the probability at 70%. Even so, these stories are what rise to the top of the current list of worries, and neither suggests anything more than business as usual.

There have, of course, been egregious examples of harm from AI tools, such as deepfakes used for financial scams or to portray innocent people in nude images. However, these deepfakes are created at the direction of nefarious humans, not by AI acting on its own. There are also worries that the application of AI may eliminate a significant number of jobs, although so far this has not materialized.

In fact, AI technology carries a long list of potential risks. It can be weaponized, it encodes societal biases, and it can lead to privacy violations. We also still struggle to explain how it works. However, there is no evidence yet that AI on its own is out to harm or kill us.

Nevertheless, this lack of evidence did not stop 13 current and former employees of leading AI providers from issuing a whistleblowing letter. The letter warns that the technology poses grave risks to humanity, including significant loss of life. The whistleblowers include experts who have worked closely with cutting-edge AI systems, which adds weight to their concerns. We have heard this before. AI researcher Eliezer Yudkowsky, for one, worries that ChatGPT points toward a near future when AI "gets to smarter-than-human intelligence" and kills everyone.

Even so, as Casey Newton pointed out about the letter in Platformer: "Anyone looking for jaw-dropping allegations from the whistleblowers will likely leave disappointed." He noted this might be because the whistleblowers are forbidden by their employers from blowing the whistle. Or it could be that there is scant evidence beyond sci-fi narratives to support the worries. We just don't know.

Getting smarter all the time

What we do know is that "frontier" generative AI models continue to get smarter, as measured by standardized testing benchmarks. However, these results may be skewed by "overfitting." Overfitting occurs when a model performs well on its training data but poorly on new, unseen data. In one case, claims of 90th-percentile performance on the Uniform Bar Exam were shown to be overinflated.

Even so, scaling these models over the last several years has produced dramatic gains in capability. The scaling has come from adding more parameters and training on larger datasets. As a result, it is widely accepted that this growth path will lead to even smarter models in the next year or two.

What's more, many leading AI researchers believe that artificial general intelligence (AGI) could be achieved within five years. They include Geoffrey Hinton, often called an "AI godfather" for his pioneering work in neural networks. AGI is generally described as an AI system that can match or exceed human-level intelligence across most cognitive tasks and domains. It is also the point at which the existential worries could be realized. Hinton's viewpoint is significant for two reasons. He has been instrumental in building the technology that powers gen AI. And until recently, he thought AGI was decades away.

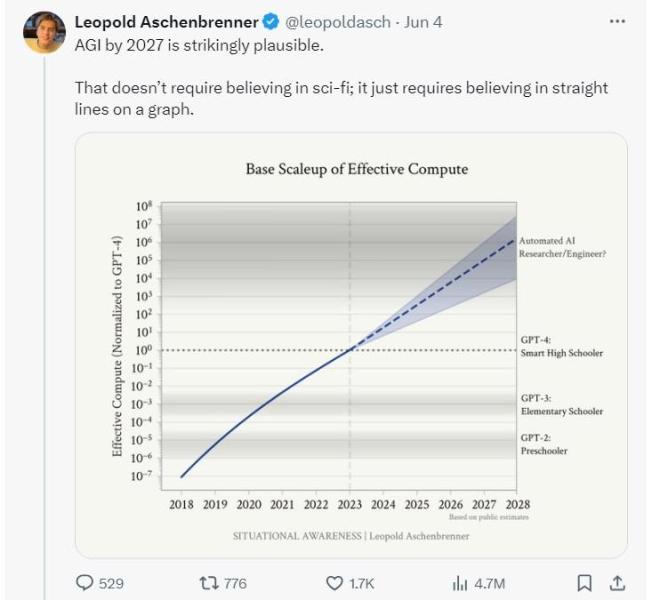

Leopold Aschenbrenner is a former OpenAI researcher on the superalignment team who was fired for allegedly leaking information. He recently published a chart suggesting that AGI is achievable by 2027. This conclusion assumes that progress will continue in a straight line, up and to the right. If that assumption holds, it adds credence to claims that AGI could be achieved in five years or less.

Another AI winter?

Not everyone agrees that gen AI will reach these heights. The next generation of tools will likely make impressive gains; that generation includes GPT-5 from OpenAI and the next iterations of Claude and Gemini. Similar progress beyond that generation, however, is not guaranteed. If technological advances level out, worries about existential threats to humanity could be moot.

AI influencer Gary Marcus has long questioned the scalability of these models. He now speculates that we are not seeing early signs of AGI. Instead, he suggests, we are seeing early signs of a new "AI winter." AI has experienced several such winters, notably in the 1970s and the late 1980s. In those periods, interest and funding in AI research declined dramatically because expectations went unmet. A winter typically follows a period of heightened expectations and hype about AI's potential. When the technology fails to deliver on overly ambitious promises, disillusionment and criticism set in.

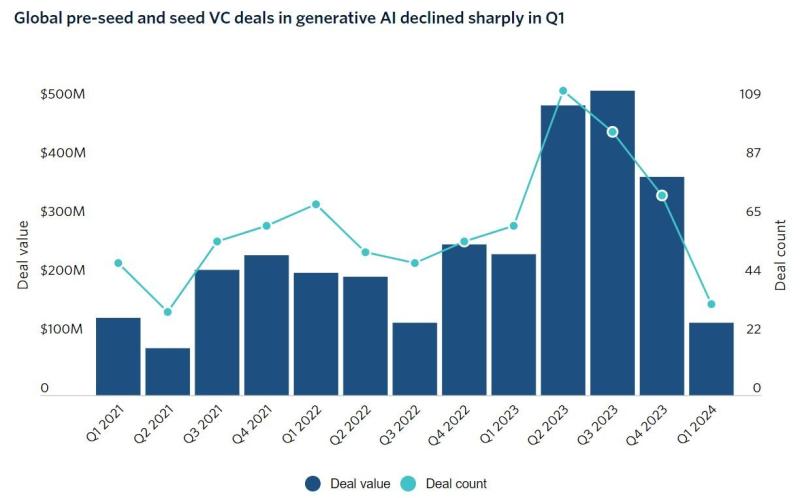

It remains to be seen whether such disillusionment is underway, but it is possible. Marcus points to a recent PitchBook story that states: "Even with AI, what goes up must eventually come down. For two consecutive quarters, generative AI dealmaking at the earliest stages has declined, dropping 76% from its peak in Q3 2023 as wary investors sit back and reassess following the initial flurry of capital into the space."

Investment deals are shrinking in both number and size. That decline may leave existing companies cash-starved before substantial revenues appear, forcing them to cut back or shut down. It could also limit the number of new companies and new ideas entering the marketplace. It is unlikely, however, to have any impact on the largest firms developing frontier AI models.

Adding to this trend is a Fast Company story that claims there is “little evidence that the [AI] technology is broadly unleashing enough new productivity to push up company earnings or lift stock prices.” Consequently, the article opines that the threat of a new AI Winter may dominate the AI conversation in the latter half of 2024.

Full speed ahead

Nevertheless, the prevailing wisdom might be best captured by Gartner, which states: "Similar to the introduction of the internet, the printing press or even electricity, AI is having an impact on society. It is just about to transform society as a whole. The age of AI has arrived. Advancement in AI cannot be stopped or even slowed down."

Comparing AI to the printing press and electricity underscores the transformative potential many believe AI holds. That belief drives continued investment and development, and it also explains why so many are all-in on AI. Ethan Mollick is a professor at the Wharton School. He said recently on Harvard Business Review's Tech at Work podcast that work teams should bring gen AI into everything they do, right now.

In his One Useful Thing blog, Mollick points to recent evidence of how advanced gen AI models have become. For example: "If you debate with an AI, they are 87% more likely to persuade you to their assigned viewpoint than if you debate with an average human." He also cited a study in which an AI model outperformed humans at providing emotional support. Specifically, the research focused on cognitive reappraisal, the skill of reframing negative situations to reduce negative emotions. The bot outperformed humans on three of the four metrics examined.

The horns of a dilemma

The underlying question behind this conversation is whether AI will solve some of our greatest challenges or ultimately destroy humanity. The simple answer is that nobody knows. Most likely, advanced AI will bring a blend of magical gains and regrettable harm.

Perhaps in keeping with the broader zeitgeist, the promise of technological progress has never been so polarized. Even tech billionaires are divided, though they are presumably better informed than most. Elon Musk and Mark Zuckerberg, for example, have publicly clashed over AI's potential risks and benefits. What is clear is that the doomsday debate is not going away, nor is it close to resolution.

My own probability of doom, or "P(doom)," remains low. A year ago I put my P(doom) at about 5%, and I stand by that. While the worries are legitimate, I find recent developments on the AI safety front encouraging.

Most notably, Anthropic has made progress in explaining how LLMs work. Its researchers have recently been able to look inside Claude 3. They identified which combinations of its artificial neurons evoke specific concepts, or "features." As Steven Levy noted in Wired, "Work like this has potentially huge implications for AI safety: If you can figure out where danger lurks inside an LLM, you are presumably better equipped to stop it."

Ultimately, the future of AI remains uncertain, poised between unprecedented opportunity and significant risk. Informed dialogue, ethical development and proactive oversight are crucial to ensuring AI benefits society. The dreams of many for a world of abundance and leisure could be realized, or they could turn into a nightmarish hellscape. Responsible AI development with clear ethical principles, rigorous safety testing, human oversight and robust control measures is essential to navigate this rapidly evolving landscape.

Gary Grossman is EVP of technology practice at Edelman and global lead of the Edelman AI Center of Excellence.