Should you add glue to your pizza, stare directly at the sun for 30 minutes per day, eat rocks or a poisonous mushroom, treat a snake bite with ice, and jump off the Golden Gate Bridge?

According to information served up through Google Search’s new “AI Overview” feature, these obviously stupid and harmful suggestions are not only good ideas, but belong at the very top of the results a user sees when searching with its signature product.

What is going on, where did all this bad information come from, and why is Google putting it at the top of its search results pages right now? Let’s dive in.

What is Google AI Overview?

In its bid to catch up to rival OpenAI and its hit chatbot ChatGPT in the large language model (LLM) chatbot and search game, Google introduced a new feature called “Search Generative Experience” nearly a year ago, in May 2023.

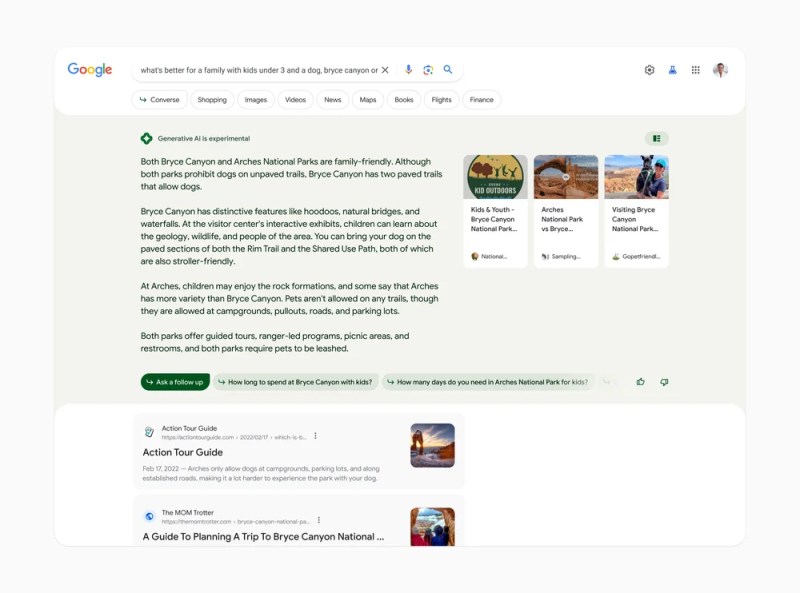

It was described then as: “an AI-powered snapshot of key information to consider, with links to dig deeper,” and appeared basically as a new paragraph of text right below the Google Search input bar, above the traditional list of blue links the user typically got when searching Google.

The feature was said to be powered by Search-specific AI models. At the time, it was an “opt-in” service and users had to jump through a number of hoops to turn it on.

But 10 days ago at Google’s I/O conference, amid a fleet of AI-related announcements, the company announced the Search Generative Experience had been renamed AI Overviews and was coming as the default experience on Google Search to all users, beginning with those in the U.S.

There are ways to turn it off or perform Google Searches without AI Overviews (namely the “Web” tab on Google Search), but users now have to take a few extra steps to do so.

Why is Google AI Overview controversial?

Ever since Google turned AI Overviews on as the default for users in the U.S., some have been taking to X and other social sites to post the horrible, awful, no-good results that come up in the feature when searching different queries.

In some cases, the AI-powered feature chooses to display wildly incorrect, inflammatory and downright dangerous information.

Even celebrities including musician Lil Nas X have joined in the pile-on:

Other results are more harmless but still incorrect and make Google look stupid and unreliable:

The poor-quality AI-generated results have taken on a life of their own and even become a meme, with some users photoshopping answers into screenshots to make Google look even worse than it already does in the real results:

Google has qualified the AI Overview feature as being “experimental,” putting the following text at the bottom of each result: “Generative AI is experimental,” and linking to a page that describes the new feature in more detail.

On that page, Google writes: “AI Overviews can take the work out of searching by providing an AI-generated snapshot with key information and links to dig deeper…With user feedback and human reviews, we evaluate and improve the quality of our results and products responsibly.”

Will Google pull AI Overview?

Some users took to X (formerly Twitter) to call on Google to remove the AI Overview feature, or to predict that the search giant would end up doing so, at least temporarily. That would mirror the tack Google took after its Gemini AI image generation feature was shown to create racially and historically inaccurate images earlier this year, inflaming prominent Silicon Valley libertarians and politically conservative figures such as Marc Andreessen and Elon Musk.

In a statement to The Verge, a Google spokesperson said the examples users were sharing of the AI Overview feature are “generally very uncommon queries, and aren’t representative of most people’s experiences.”

In addition, The Verge reported that: “The company has taken action against violations of its policies…and are using these ‘isolated examples’ to continue to refine the product.”

Yet as some have observed on X, this sounds an awful lot like victim blaming.

Others have posited that AI developers could be held legally liable for dangerous results such as the kind shown in AI Overview:

Importantly, tech journalists and other digitally literate users have noted that Google appears to be using its AI models to summarize content it has previously indexed in Search: content it did not originate but is nonetheless relying upon to provide its users with “key information.”

Ultimately, it’s hard to say what percentage of searches display this erroneous information.

But one thing is clear: AI Overview seems to be more prone than Google Search was previously to disinformation from untrustworthy sources, or information posted as a joke which the underlying AI models responsible for summarization cannot understand as such, and instead treat as serious.

Now, whether users actually act upon the information provided in these results remains to be seen — but if they do, it is clearly unwise and could pose risks to their health and safety.

Let’s hope users are smart enough to check alternate sources. Say, rival AI search startup Perplexity, which seems to have fewer problems surfacing correct information than Google’s AI Overviews at the moment (an unfortunate irony for the search giant and its users, given Google’s role in first conceiving of and articulating the machine learning transformer architecture at the heart of the modern generative AI/LLM boom).