AI-generated malware that avoids detection could already be available to nation states, according to the UK’s cybersecurity agency.

To produce such powerful software, threat actors need to train an AI model on “quality exploit data,” the National Cyber Security Centre (NCSC) said today. The resulting system would create new code that evades current security measures.

“There is a realistic possibility that highly capable states have repositories of malware that are large enough to effectively train an AI model for this purpose,” the NCSC warned.

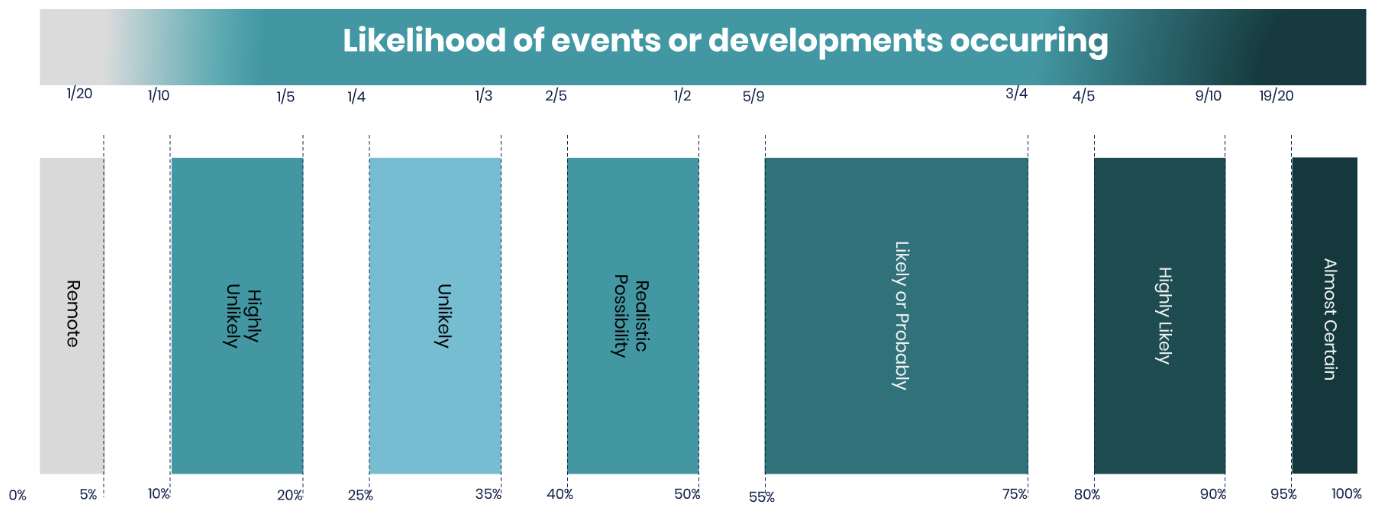

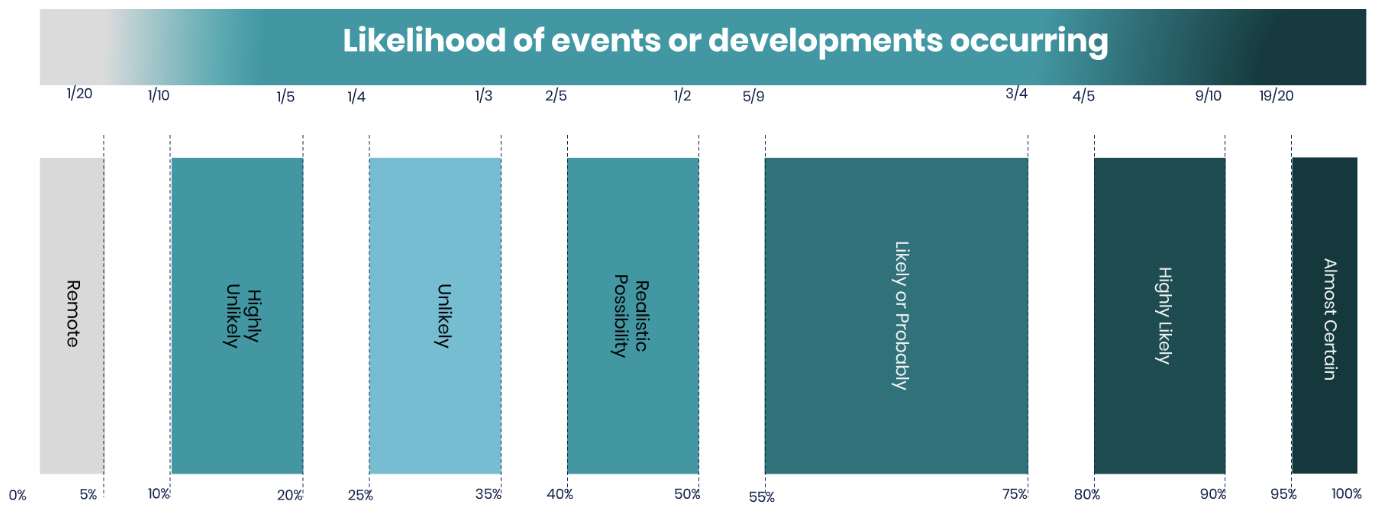

As for what a “realistic possibility” actually means, the agency’s “probability yardstick” offers some clarity: the term corresponds to a likelihood of roughly 40% to 50%.

The warning was one of several alarms raised by the NCSC. The agency expects AI to heighten the global ransomware threat, improve the targeting of victims, and lower the barrier to entry for cybercriminals.

Generative AI is also amplifying existing threats. It is particularly useful for social engineering, such as holding convincing interactions with victims and creating lure documents.

GenAI will make it harder to identify phishing, spoofing, and malicious emails or password reset requests. But nation states will gain the most powerful weapons.

“Highly capable state actors are almost certainly best placed amongst cyber threat actors to harness the potential of AI in advanced cyber operations,” the agency said.

In the near term, however, artificial intelligence is expected to enhance existing threats rather than transform the risk landscape. Ransomware is a particular concern.

“Ransomware continues to be a national security threat,” James Babbage, Director General for Threats at the National Crime Agency, said in a statement.

“As this report shows, the threat is likely to increase in the coming years due to advancements in AI and the exploitation of this technology by cybercriminals.”