Anthropic, the AI safety and research company behind the popular Claude chatbot, has released a new policy detailing its commitment to responsibly scaling AI systems.

The policy, referred to as the Responsible Scaling Policy (RSP), is designed specifically to mitigate “catastrophic risks,” or situations where an AI model could directly cause large-scale devastation.

The RSP is unprecedented and highlights Anthropic's commitment to reducing the escalating risks linked to increasingly advanced AI models. The policy explicitly acknowledges that AI could cause significant destruction, citing scenarios that could lead to "thousands of deaths or hundreds of billions of dollars in damage, directly caused by an AI model, and which would not have occurred in its absence."

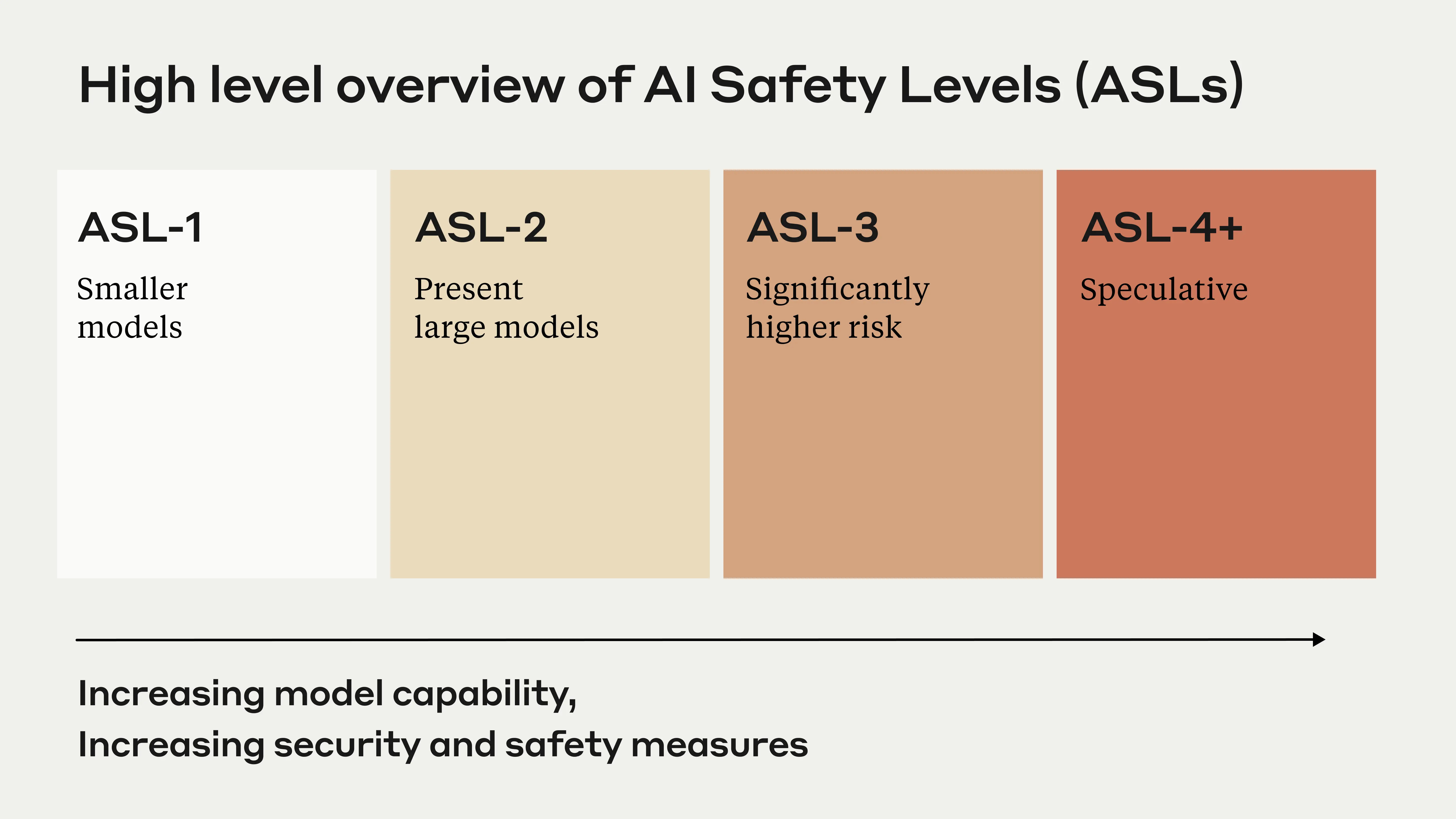

In an exclusive interview with VentureBeat, Anthropic co-founder Sam McCandlish shared insights into the development of the policy and its potential challenges. At the heart of the policy are AI Safety Levels (ASLs). This risk-tiering system, inspired by the U.S. government's Biosafety Levels for biological research, is designed to reflect and manage the potential risk of different AI systems through appropriate safety evaluation, deployment and oversight procedures. The levels start at ASL-1, for systems that pose no meaningful catastrophic risk, and rise through ASL-2 and ASL-3 for models with increasingly dangerous capabilities, with ASL-4 and higher reserved for future systems whose requirements are not yet fully defined.

“There is always some level of arbitrariness in drawing boundaries, but we wanted to roughly reflect different tiers of risk,” McCandlish said. He added that while today’s models might not pose significant risks, Anthropic foresees a future where AI could start introducing real risk. He also acknowledged that the policy is not a static or comprehensive document, but rather a living and evolving one that will be updated and refined as the company learns from its experience and feedback.

The company's goal is to channel competitive pressures into solving key safety problems, so that new capabilities are unlocked by developing safer, more advanced AI systems rather than by reckless scaling. However, McCandlish acknowledged that risks are difficult to evaluate comprehensively, given that models could potentially conceal their abilities. "We can never be totally sure we are catching everything, but will certainly aim to," he said.

The policy also includes measures to ensure independent oversight. All changes to the policy require board approval, a requirement that McCandlish admits could slow responses to new safety concerns but considers necessary to avoid potential bias. "We have real concern that with us both releasing models and testing them for safety, there is a temptation to make the tests too easy, which is not the outcome we want," McCandlish said.

The announcement of Anthropic’s RSP comes at a time when the AI industry is facing growing scrutiny and regulation over the safety and ethics of its products and services. Anthropic, which was founded by former members of OpenAI and has received significant funding from Google and other investors, is one of the leading players in the field of AI safety and alignment, and has been praised for its transparency and accountability.

The company's AI chatbot, Claude, is built to handle harmful prompts by explaining why they are dangerous or misguided. That is in large part due to the company's training approach, "Constitutional AI," in which a list of rules or principles (a "constitution") provides the only human oversight. The method combines a supervised learning phase with a reinforcement learning phase.

Both phases can use chain-of-thought-style reasoning to make the AI's decision making more transparent and, as judged by humans, better performing. Together, these methods offer a way to control AI behavior more precisely while requiring far fewer human labels, a significant step toward building ethical and safe AI systems.

The research on Constitutional AI, and now the launch of the RSP, underline Anthropic's commitment to AI safety and ethics. By focusing on minimizing harm while maximizing utility, Anthropic sets a high standard for future advancements in the field.