Enterprise data stacks are notoriously diverse, chaotic and fragmented. With data flowing from multiple sources into complex, multi-cloud platforms and then distributed across varied AI, BI and chatbot applications, managing these ecosystems has become a formidable and time-consuming challenge. Today, Connecty AI, a startup based in San Francisco, emerged from stealth mode with $1.8 million to simplify this complexity with a context-aware approach.

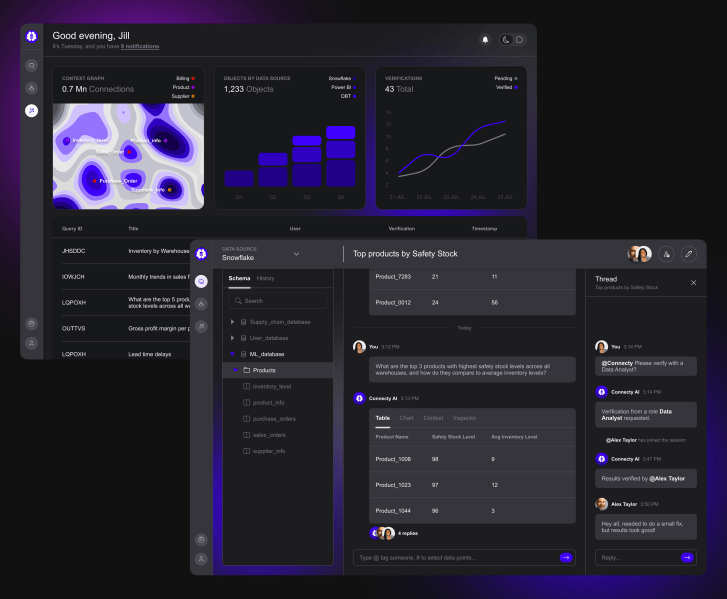

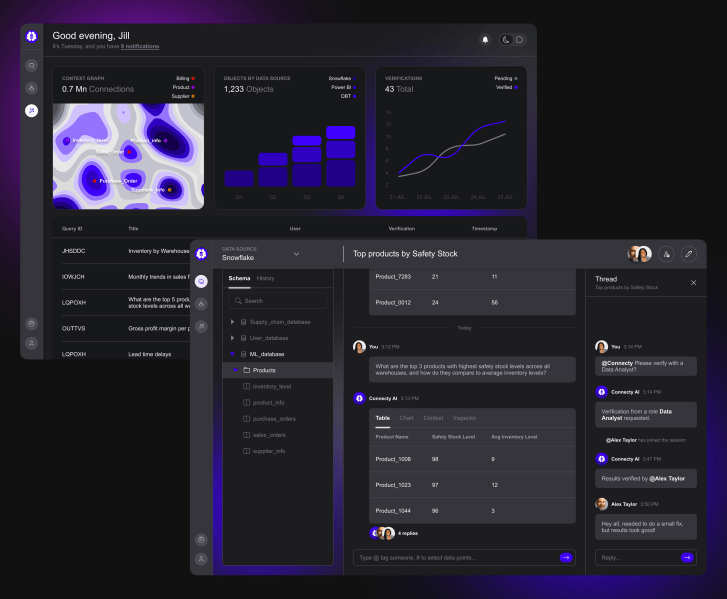

Connecty’s core innovation is a context engine that spans enterprises’ entire horizontal data pipelines—actively analyzing and connecting diverse data sources. By linking the data points, the platform captures a nuanced understanding of what’s going on in the business in real time. This “contextual awareness” powers automated data tasks and ultimately enables accurate, actionable business insights.

While still in its early days, Connecty is already streamlining data tasks for several enterprises. The platform is reducing data teams’ work by up to 80%, executing projects that once took weeks in a matter of minutes.

Connecty bringing order to ‘data chaos’

Even before the age of language models, data chaos was a grim reality.

With structured and unstructured information growing at an unprecedented pace, teams have continuously struggled to keep their fragmented data architectures in order. As a result, essential business context stays scattered and data schemas go out of date, which degrades downstream applications. Think of AI chatbots that hallucinate or BI dashboards that report inaccurate business insights.

Connecty AI founders Aish Agarwal and Peter Wisniewski saw these challenges firsthand in their respective roles in the data value chain and noted that everything boils down to one major issue: grasping the nuances of business data spread across pipelines. In practice, teams had to do a lot of manual work on data preparation, mapping, exploratory data analysis and data model preparation.

To fix this, the duo started working on the startup and the context engine that sits at its heart.

“The core of our solution is the proprietary context engine that in real-time extracts, connects, updates, and enriches data from diverse sources (via no-code integrations), which includes human-in-the-loop feedback to fine-tune custom definitions. We do this with a combination of vector databases, graph databases and structured data, constructing a ‘context graph’ that captures and maintains a nuanced, interconnected view of all information,” Agarwal told VentureBeat.

Once the enterprise-specific context graph covering all data pipelines is ready, the platform uses it to auto-generate a dynamic personalized semantic layer for each user’s persona. This layer runs in the background, proactively generating recommendations within data pipelines, updating documentation and enabling the delivery of contextually relevant insights, tailored instantly to the needs of various stakeholders.
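To make the idea concrete, here is a minimal sketch of how a context graph could feed a persona-specific semantic layer. It is an illustration only, not Connecty's implementation: the `ContextGraph` class, the `semantic_layer` function, the `derived_from` relation and every table, metric and persona name below are hypothetical, and a plain in-memory structure stands in for the vector and graph databases Agarwal describes.

```python
from dataclasses import dataclass, field


@dataclass
class ContextGraph:
    """Toy context graph (hypothetical): nodes are data assets, edges are named relations."""
    nodes: dict = field(default_factory=dict)  # node id -> metadata dict
    edges: list = field(default_factory=list)  # (source id, relation, target id)

    def add_node(self, node_id, **metadata):
        self.nodes[node_id] = metadata

    def link(self, source, relation, target):
        # Refuse edges that point at assets the graph does not know about.
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must be added before linking")
        self.edges.append((source, relation, target))

    def neighbors(self, node_id):
        """Return (relation, target) pairs for edges leaving node_id."""
        return [(rel, dst) for src, rel, dst in self.edges if src == node_id]


def semantic_layer(graph, persona):
    """Map each metric tagged for the persona to the sources it is derived from."""
    layer = {}
    for node_id, meta in graph.nodes.items():
        if meta.get("kind") == "metric" and persona in meta.get("personas", ()):
            layer[node_id] = sorted(
                dst for rel, dst in graph.neighbors(node_id) if rel == "derived_from"
            )
    return layer


# Illustrative data only; none of these names come from the article.
graph = ContextGraph()
graph.add_node("warehouse.orders", kind="table")
graph.add_node("crm.accounts", kind="table")
graph.add_node("monthly_revenue", kind="metric", personas={"finance", "product"})
graph.add_node("churn_rate", kind="metric", personas={"product"})
graph.link("monthly_revenue", "derived_from", "warehouse.orders")
graph.link("churn_rate", "derived_from", "crm.accounts")
graph.link("churn_rate", "derived_from", "warehouse.orders")

print(semantic_layer(graph, "finance"))
```

In this toy version, a finance user sees only the revenue metric and its source table, while a product user also sees churn. A production system would presumably rebuild such views as sources change, which is the "dynamic" part of the company's claim.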

“Connecty AI applies deep context learning of disparate datasets and their connections with each object to generate comprehensive documentation and identify business metrics based on business intent. In the data preparation phase, Connecty AI will generate a dynamic semantic layer that helps automate data model generation while highlighting inconsistencies and resolving them with human feedback that further enriches the context learning. Additionally, self-service capabilities for data exploration will empower product managers to perform ad-hoc analyses independently, minimizing their reliance on technical teams and facilitating more agile, data-driven decision-making,” Agarwal explained.

The insights are delivered via ‘data agents’ which interact with users in natural language while considering their technical expertise, information access level and permissions. In essence, the founder explains, every user persona gets a customized experience that fits their role and skill set, making it easier to interact with data effectively, boosting productivity and reducing the need for extensive training.

Significant results for early partners

While many companies, including startups like DataGPT and multibillion-dollar giants like Snowflake, have been promising faster access to accurate insights through interfaces powered by large language models, Connecty claims to stand out with a context graph-based approach that covers the entire stack, not just one or two platforms.

According to the company, other organizations automate data workflows by interpreting static schemas, but that approach falls short in production environments, which require a continuously evolving, cohesive understanding of data across systems and teams.

Connecty AI is currently pre-revenue, although it is working with several partner companies to improve its product's performance on real-world data and workflows. These include Kittl, Fiege, Mindtickle and Dept. All four organizations are running Connecty proofs of concept (POCs) in their environments and, according to the company, have been able to optimize data projects, reducing their teams' work by up to 80% and accelerating time to insights.

“Our data complexity is growing fast, and it takes longer to data prep and analyze metrics. We would wait 2-3 weeks on average to prepare data and extract actionable insights from our product usage data and merge them with transactional and marketing data. Now with Connecty AI, it’s a matter of minutes,” said Nicolas Heymann, the CEO of Kittl.

As a next step, Connecty plans to broaden what its context engine can understand by supporting additional data sources. It will also launch the product to a wider set of companies as an API service, priced either per seat or by usage.
