CISOs and CIOs continue to weigh the benefits of deploying generative AI as a continuous learning engine that constantly captures behavioral, telemetry, intrusion and breach data versus the risks it creates. The goal is to achieve a new “muscle memory” of threat intelligence to help predict and stop breaches while streamlining SecOps workflows.

Trust in gen AI, however, is mixed. VentureBeat recently spoke with several CISOs across a broad spectrum of manufacturing and service industries and learned that, despite the potential for productivity gains across marketing, operations and especially security, compromised intellectual property and data confidentiality are among the risks board members ask about most often.

Keeping pace in the weaponized arms race

Deep Instinct’s recent survey, “Generative AI and Cybersecurity: Bright Future or Business Battleground?” quantifies the trends VentureBeat hears in CISO interviews. The study found that while 69% of organizations have adopted generative AI tools, 46% of cybersecurity professionals feel that generative AI makes organizations more vulnerable to attacks. Eighty-eight percent of CISOs and security leaders say that weaponized AI attacks are inevitable.

Eighty-five percent believe that gen AI has likely powered recent attacks, citing the resurgence of WormGPT, a generative AI tool advertised on underground forums to attackers interested in launching phishing and business email compromise attacks. Weaponized gen AI tools for sale on the dark web and over Telegram quickly become best sellers: FraudGPT, for example, reached 3,000 subscriptions by July.

Sven Krasser, chief scientist and senior vice president at CrowdStrike, told VentureBeat that attackers are speeding up efforts to weaponize large language models (LLMs) and generative AI. Krasser emphasized that cybercriminals are adopting LLM technology for phishing and malware but that “while this increases the speed and the volume of attacks that an adversary can mount, it does not significantly change the quality of attacks.”

Krasser continued, “Cloud-based security that correlates signals from across the globe using AI is also an effective defense against these new threats.” He observed that “generative AI is not pushing the bar any higher when it comes to these malicious techniques, but it is raising the average and making it easier for less skilled adversaries to be more effective.”

“Businesses must implement cyber AI for defense before offensive AI becomes mainstream. When it becomes a war of algorithms against algorithms, only autonomous response will be able to fight back at machine speeds to stop AI-augmented attacks,” said Max Heinemeyer, director of threat hunting at Darktrace.

Generative AI use cases are driving a growing market

Gen AI’s ability to constantly learn is a compelling advantage, especially when deciphering the massive amounts of data that endpoints create. Continually updated threat assessment and risk prioritization algorithms also fuel new use cases that CISOs and CIOs anticipate will improve behavior analysis and threat prediction. Ivanti’s recent partnership with Securin aims to deliver more precise, real-time risk prioritization algorithms while achieving several other key goals to strengthen its customers’ security postures.

Ivanti and Securin are collaborating to update risk prioritization algorithms by combining Securin’s Vulnerability Intelligence (VI) with Ivanti Neurons for Vulnerability Knowledge Base. The goal is to provide near-real-time vulnerability threat intelligence so customers’ security experts can expedite vulnerability assessment and prioritization. “By partnering with Securin, we are able to provide robust intelligence and risk prioritization to customers on all vulnerabilities no matter the source by using AI Augmented Human Intelligence,” said Dr. Srinivas Mukkamala, chief product officer at Ivanti.
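Neither company has published how its prioritization algorithm works, so the following is only a minimal Python sketch of the general idea: fold threat-intelligence signals into a score and rank vulnerabilities by it. The `Vulnerability` fields, the weighting in `risk_score` and the `VULN-n` identifiers are hypothetical illustrations, not the Securin or Ivanti data model.

```python
from dataclasses import dataclass


@dataclass
class Vulnerability:
    # Hypothetical fields; real intelligence feeds expose their own schemas.
    cve_id: str
    cvss: float              # base severity score, 0.0 to 10.0
    exploited_in_wild: bool  # threat-intelligence signal: seen in active attacks
    asset_criticality: int   # 1 (low) to 5 (business critical), from the asset inventory


def risk_score(v: Vulnerability) -> float:
    """Toy score: severity scaled by asset importance, doubled if actively exploited."""
    score = v.cvss * v.asset_criticality / 5
    if v.exploited_in_wild:
        score *= 2
    return score


def prioritize(vulns: list[Vulnerability]) -> list[str]:
    """Return identifiers ordered from highest to lowest risk score."""
    return [v.cve_id for v in sorted(vulns, key=risk_score, reverse=True)]


vulns = [
    Vulnerability("VULN-1", 9.8, False, 2),
    Vulnerability("VULN-2", 7.5, True, 5),
    Vulnerability("VULN-3", 5.0, False, 5),
]
print(prioritize(vulns))  # ['VULN-2', 'VULN-3', 'VULN-1']
```

A production system would draw these inputs from live feeds and asset inventories and weigh many more signals; the point of the toy ranking is that an actively exploited, medium-severity flaw on a critical asset can outrank a higher-CVSS flaw on a low-value one.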

Gen AI’s many potential use cases are a compelling catalyst driving market growth, even with trust in the current generation of the technology split across the CISO community. The market value of generative AI-based cybersecurity platforms, systems and solutions is expected to rise to $11.2 billion in 2032 from $1.6 billion in 2022, a 22% CAGR. Canalys expects generative AI to support more than 70% of businesses’ cybersecurity operations within five years.
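The forecast can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below plugs in the $1.6 billion (2022) and $11.2 billion (2032) figures; it yields roughly 21.5% a year, close to the approximately 22% cited.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Market-size figures from the forecast, in billions of US dollars.
rate = cagr(start_value=1.6, end_value=11.2, years=2032 - 2022)
print(f"{rate:.1%}")  # 21.5%
```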

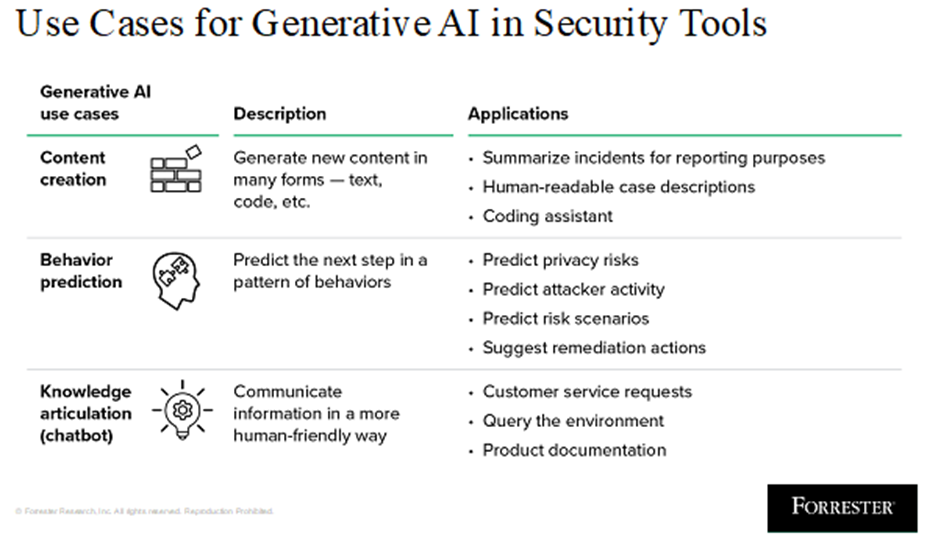

Forrester groups generative AI use cases into three categories: content creation, behavior prediction and knowledge articulation. “The use of AI and ML in security tools is not new. Almost every security tool developed over the past ten years uses ML in some form. For example, adaptive and contextual authentication has been used to build risk-scoring logic based on heuristic rules and naive Bayesian classification and logistic regression analytics,” writes Forrester Principal Analyst Allie Mellen.
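To make Mellen’s example concrete, here is a minimal Python sketch of logistic-regression-style risk scoring for adaptive authentication, combined with simple heuristic thresholds. The signal names, weights, bias and thresholds are all hypothetical; a real system would learn the weights from labeled login history rather than hard-coding them.

```python
import math

# Hypothetical weights for binary login signals; a real model learns these from data.
WEIGHTS = {
    "new_device": 1.8,
    "unusual_country": 2.2,
    "impossible_travel": 3.0,
    "off_hours": 0.7,
}
BIAS = -4.0  # keeps the baseline risk low when no signal fires


def login_risk(signals: dict[str, bool]) -> float:
    """Logistic regression: sigmoid of the bias plus the weights of the signals present."""
    z = BIAS + sum(w for name, w in WEIGHTS.items() if signals.get(name, False))
    return 1 / (1 + math.exp(-z))


def auth_decision(signals: dict[str, bool],
                  step_up_threshold: float = 0.3,
                  block_threshold: float = 0.8) -> str:
    """Heuristic rules on top of the score: allow, require MFA, or block."""
    risk = login_risk(signals)
    if risk >= block_threshold:
        return "block"
    if risk >= step_up_threshold:
        return "step_up_mfa"
    return "allow"


print(auth_decision({}))                                             # allow
print(auth_decision({"new_device": True, "unusual_country": True}))  # step_up_mfa
```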

Generative AI needs to flex and adapt to each business differently

How CISOs and CIOs advise their boards on balancing the risks and benefits of generative AI will define the technology’s future for years to come. Gartner predicts that 80% of applications will include generative AI capabilities by 2026, a rate of adoption that is already setting a precedent in most organizations.

CISOs who report getting the most value from the first generation of gen AI apps say the key is how well a platform or app adapts to the way their teams work. That extends to how gen AI-based technologies can support and strengthen the broader zero-trust security frameworks they’re in the process of building.

Here are the use cases and guidance from CISOs piloting gen AI and where they expect to see the greatest value:

Taking a zero-trust approach to every interaction with generative AI tools, apps, platforms and endpoints is a must-have for any CISO’s playbook. This must include continuous monitoring, dynamic access controls, and always-on verification of users, devices and the data they use at rest and in transit.
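As an illustration of what always-on verification can mean in practice, the Python sketch below evaluates every request to a generative AI tool on its own merits, with no trust carried over from earlier requests or from network location. The `AccessRequest` fields, the classification labels and the rule that confidential data requires MFA are hypothetical policy choices, not any specific vendor’s product.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    # Hypothetical per-request context gathered by a policy enforcement point.
    user_authenticated: bool
    mfa_verified: bool
    device_compliant: bool    # e.g., patched OS, endpoint agent running
    channel_encrypted: bool   # data in transit protected, e.g., by TLS
    data_classification: str  # "public", "internal" or "confidential"


def evaluate(req: AccessRequest) -> tuple[bool, str]:
    """Check every request from scratch; return (allowed, reason)."""
    if not req.user_authenticated:
        return False, "user not authenticated"
    if not req.device_compliant:
        return False, "device fails compliance check"
    if not req.channel_encrypted:
        return False, "unencrypted channel"
    # Dynamic access control: stronger verification for more sensitive data.
    if req.data_classification == "confidential" and not req.mfa_verified:
        return False, "MFA required for confidential data"
    return True, "allowed"


req = AccessRequest(True, False, True, True, "confidential")
print(evaluate(req))  # (False, 'MFA required for confidential data')
```

Continuous monitoring would mean re-running a check like this on every call with fresh device and session signals, rather than once at login.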

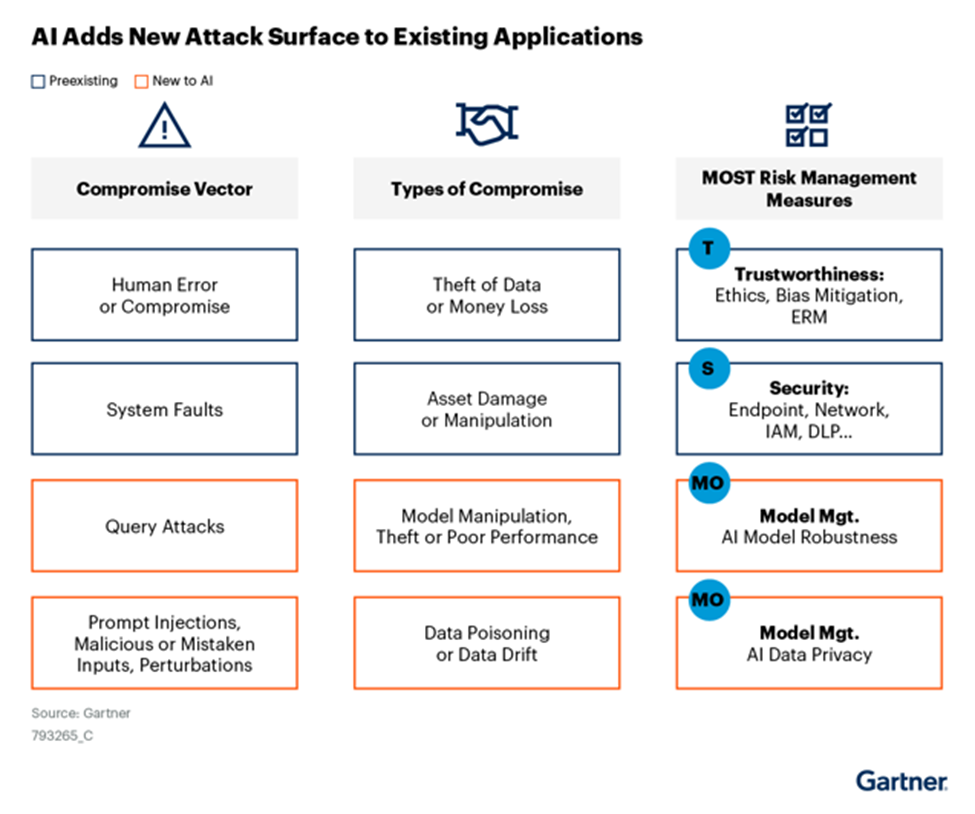

CISOs are most worried about generative AI bringing new attack vectors they’re unprepared to protect against. For enterprises building LLMs, protecting against query attacks, prompt injections, model manipulation and data poisoning is a priority.

To harden infrastructure for the next generation of attack surfaces, CISOs and their teams are doubling down on zero trust. Source: Key Impacts of Generative AI on CISO, Gartner

Managing knowledge with gen AI

The most popular use case is using gen AI to manage knowledge across security teams and, in large enterprises, as a substitute for more expensive and lengthy system integration projects. ChatGPT-style copilots dominated RSAC 2023. Google Security AI Workbench, Microsoft Security Copilot (launched before the show), Recorded Future, SecurityScorecard and SentinelOne were among the vendors launching LLM-based solutions.

Ivanti has taken a leadership role in this area, given its insight into customers’ IT service management (ITSM), cybersecurity and network security requirements. The company is offering a webinar on the topic, “How to Transform IT Service Management with Generative AI,” featuring Susan Fung, principal product manager, AI/ML at Ivanti.

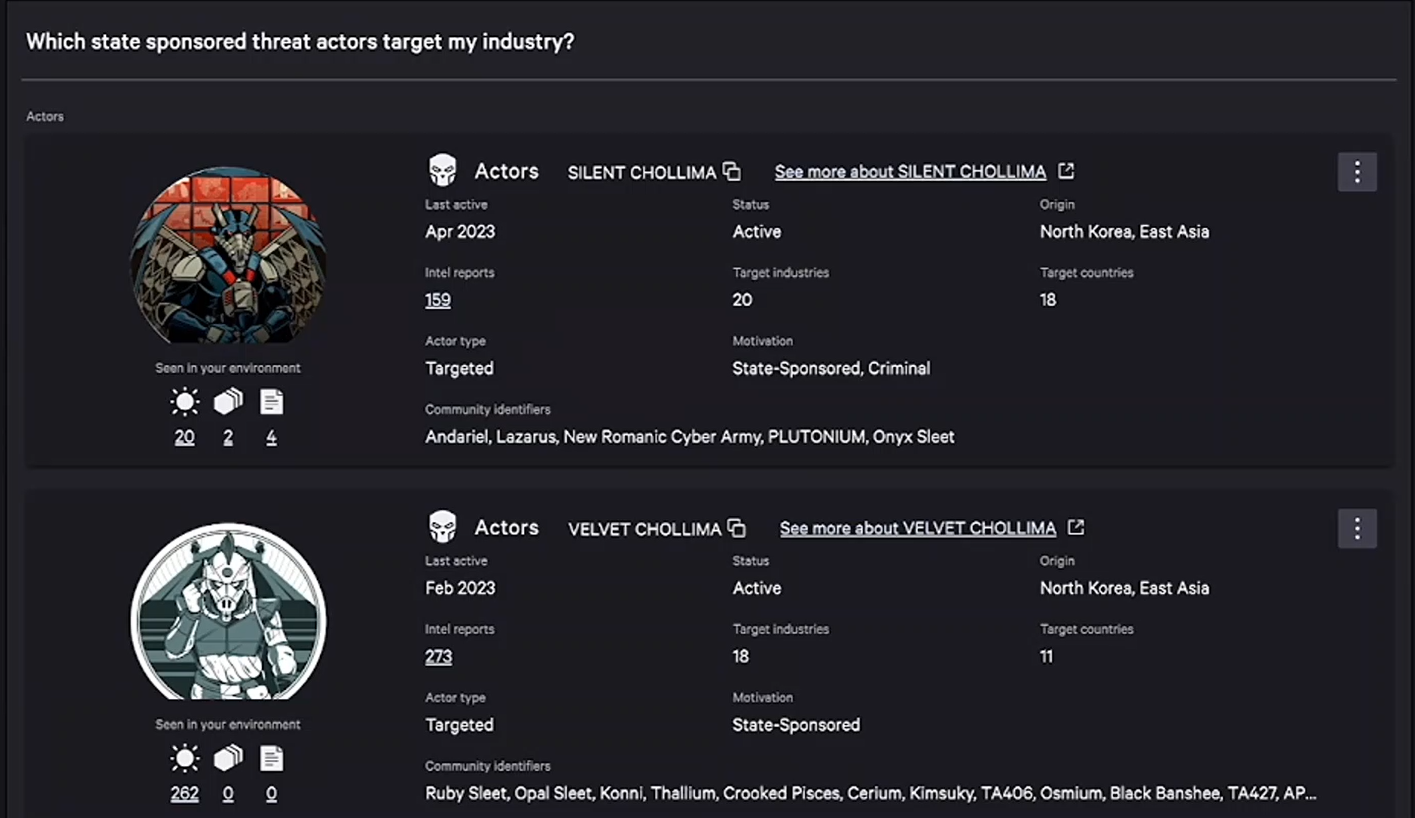

At its annual Fal.Con 2023 event earlier this year, CrowdStrike made twelve new announcements. Charlotte AI brings the power of conversational AI to the Falcon platform to accelerate threat detection, investigation and response through natural language interactions. Charlotte AI generates an LLM-powered incident summary to help security analysts save time analyzing breaches.

Charlotte AI will be released to all CrowdStrike Falcon customers over the next year, with initial upgrades starting in late September 2023 on the Raptor platform. Raj Rajamani, CrowdStrike’s chief product officer, says that Charlotte AI helps make security analysts “two or three times more productive” by automating repetitive tasks. Rajamani explained to VentureBeat that CrowdStrike has invested heavily in its graph database architecture to fuel Charlotte’s capabilities across endpoints, cloud and identities.

Working behind the scenes, Charlotte AI displays current and past conversations and questions, iterating on them in real time to track threat actors and potential threats using generative AI. Source: CrowdStrike Fal.Con 2023

Identifying and fixing cloud configuration errors

Cloud exploitation attacks grew 95% year over year as attackers constantly work to improve their tradecraft and exploit cloud misconfigurations. The cloud is one of the fastest-growing threat surfaces enterprises need to defend.
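Identifying misconfigurations ultimately comes down to checking resource settings against policy. The minimal Python sketch below does this for a few well-known mistakes: publicly readable storage, storage without encryption at rest and SSH open to the entire internet. The resource dictionaries use a hypothetical, provider-neutral format; real posture-management tools read provider APIs or infrastructure-as-code files and cover far more rules.

```python
def find_misconfigurations(resources: list[dict]) -> list[str]:
    """Return human-readable findings for a few common cloud misconfigurations."""
    findings = []
    for r in resources:
        name = r.get("name", "<unnamed>")
        if r.get("type") == "storage_bucket":
            if r.get("public_read", False):
                findings.append(f"{name}: bucket is publicly readable")
            if not r.get("encrypted", False):
                findings.append(f"{name}: encryption at rest is disabled")
        elif r.get("type") == "firewall_rule":
            # 0.0.0.0/0 means "any IPv4 address"; port 22 is SSH.
            if r.get("source") == "0.0.0.0/0" and 22 in r.get("ports", []):
                findings.append(f"{name}: SSH is open to the internet")
    return findings


# Hypothetical inventory used only for illustration.
inventory = [
    {"name": "logs", "type": "storage_bucket", "public_read": True, "encrypted": True},
    {"name": "backups", "type": "storage_bucket", "public_read": False, "encrypted": False},
    {"name": "admin-ssh", "type": "firewall_rule", "source": "0.0.0.0/0", "ports": [22]},
]
for finding in find_misconfigurations(inventory):
    print(finding)
```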

VentureBeat predicts that 2024 will see mergers, acquisitions, and more joint ventures aimed at closing multi-cloud and hybrid cloud security gaps. CrowdStrike’s acquisition of Bionic earlier this year is just the beginning of a broader trend aimed at helping organizations strengthen their application security and posture management. Previous acquisitions aimed at improving cloud security include Microsoft acquiring CloudKnox Security, CyberArk acquiring C3M, Snyk acquiring Fugue, and Rubrik acquiring Laminar.

The Bionic acquisition also strengthens CrowdStrike’s ability to sell consolidated cloud-native security on a unified platform. Bionic is a strong fit for CrowdStrike’s customer base of cloud-first organizations, and the deal reflects how acquisitions will be used to further strengthen gen AI’s potential in cybersecurity.
