
Realizing that generative AI development needs a hardened security framework with buy-in across the industry to make it more trusted and reduce the risks of attacks, Meta last week launched the Purple Llama initiative. Purple Llama combines offensive (red team) and defensive (blue team) strategies, drawing inspiration from the cybersecurity concept of purple teaming.

Defining Purple Teaming

The Purple Llama initiative combines offensive (red team) and defensive (blue team) strategies to evaluate, identify, reduce, or eliminate potential risks. Purple teaming got its name because purple represents the blending of offensive and defensive postures. Meta's choice of the term for its initiative underscores how central combining attack and defense strategies is to ensuring the safety and reliability of all AI systems.

Why Meta launched the Purple Llama Initiative now

“Purple Llama is a very welcome addition from Meta. On the heels of joining the IBM AI alliance, which is only at a talking level to promote trust, safety, and governance of AI models, Meta has taken the first step in releasing a set of tools and frameworks ahead of the work produced by the committee even before their team is finalized,” Andy Thurai, vice president and principal analyst of Constellation Research Inc., told VentureBeat in a recent interview.

In the announcement blog post, Meta observes that “as generative AI powers a wave of innovation, including conversational chatbots, image generators, and document summarization tools, Meta seeks to encourage collaboration on AI safety and build trust in these new technologies.”


The initiative signals a new era in safe, responsible gen AI development, both in how well orchestrated it is across the AI community and in the depth of the benchmarks, guidelines and tools included. One of Meta’s primary goals in creating the initiative is to provide tools that help gen AI developers reduce the risks defined in the White House commitments on developing responsible AI.

Meta kicked off the initiative by releasing CyberSec Eval, a comprehensive set of cybersecurity safety evaluation benchmarks for evaluating large language models (LLMs), and Llama Guard, a safety classifier for input/output filtering that Meta has optimized for broad deployment. Meta also released its Responsible Use Guide, which provides a series of best practices for implementing the framework.
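To make the idea of input/output filtering concrete, here is a minimal sketch of how a safety classifier can sit on both sides of a model call. It is an illustration only, not Meta's implementation: `is_safe`, `guarded_generate`, `BLOCKED_TERMS` and `REFUSAL` are hypothetical names, and the keyword check is a placeholder standing in for a real classifier such as Llama Guard.

```python
from typing import Callable

# Hypothetical placeholder policy. A real deployment would call a safety
# classifier model (for example Llama Guard) instead of matching keywords.
BLOCKED_TERMS = ("build malware", "steal credentials")

REFUSAL = "Sorry, I can't help with that."


def is_safe(text: str) -> bool:
    """Stand-in classifier: flags text containing any blocked term."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def guarded_generate(prompt: str, generate: Callable[[str], str]) -> str:
    """Check the prompt before the model runs and the response after."""
    # Input filtering: refuse before the model ever sees an unsafe prompt.
    if not is_safe(prompt):
        return REFUSAL
    response = generate(prompt)
    # Output filtering: refuse if the model produced unsafe content.
    if not is_safe(response):
        return REFUSAL
    return response
```

Passing the model in as the `generate` callable keeps the filter independent of any particular LLM, so the same wrapper could in principle guard different models.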

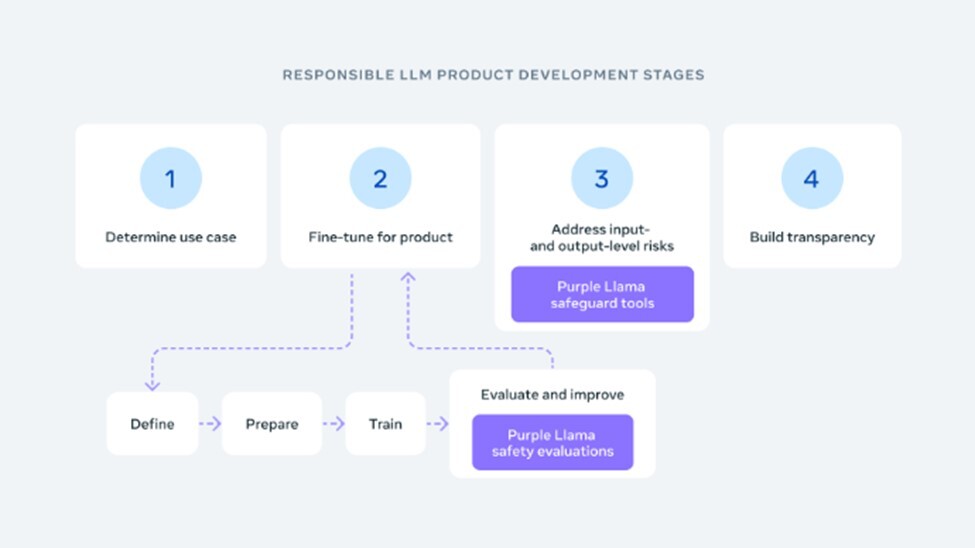

The Purple Llama initiative’s framework for responsible LLM product development reflects the lessons learned on where and how to apply safeguard tools. Source: Meta

Meta gets the win for uniting competitors to improve AI security

Cross-collaboration is core to Meta’s approach to AI development, which is based on creating as open an ecosystem as possible. That’s a challenging goal to achieve when so many competing companies are being asked to collaborate for the common good of increasing AI safety and security.

Further signaling a new era in safe, responsible gen AI development is how Meta was able to successfully gain the cooperation of the recently announced AI Alliance, AMD, AWS, Google Cloud, Hugging Face, IBM, Intel, Lightning AI, Microsoft, MLCommons, NVIDIA, Scale AI and many others to improve and make those tools available to the open source community.

“A noteworthy item to mention is the list of companies they want to work with outside of the alliance – AWS, Google, Microsoft, NVIDIA – all the top AI players missing in the original alliance,” Thurai told VentureBeat.

Meta has a successful track record of uniting partners around a common goal. In July, it launched Llama 2 with more than 100 partners, and many of them are now partnering with Meta on open trust and safety. The company is also hosting a workshop at NeurIPS 2023 to share these tools and provide a technical deep dive.

Enterprises and the CIOs, CISOs, and CEOs leading them need to see this level of cooperation and collaboration to trust gen AI and invest DevOps dollars and people to build and move models into production. By showing that competitors can collaborate for a common goal that benefits everyone, Meta and the partners involved have an opportunity to increase the credibility of all their solutions. Sales, like trust, are earned with consistency over time.

A good first step, but more is needed

“The proposed tool set is supposed to help LLM producers qualify with metrics about LLM security risks, insecure code output evaluation, and/or potentially limit the output from aiding bad actors to use these open source LLMs for malicious purposes for cyberattacks. A good first step, I would like to see a lot more,” Thurai advises.
